Artificial intelligence is no longer a futuristic buzzword in sales. It’s here, embedded in prospecting, qualification, deal management, and forecasting. Yet for all the hype, many revenue leaders are still asking the same hard question: How do I actually prove AI ROI in sales?

Boards and CROs aren’t satisfied with vague promises of “efficiency gains.” They want causal evidence that AI tools create measurable business impact. That means higher win rates, shorter cycle times, and more revenue. To deliver that proof, sales ops, RevOps, and go-to-market (GTM) leaders need to master attribution, experimentation, and reporting that withstands executive scrutiny.

In this post, we’ll break down the key challenges and frameworks for measuring AI’s impact on GTM, from attribution pitfalls to experimental design, and show how to link technical gains to the business outcomes your leadership team actually cares about.

Sales is a messy, human-driven function. Unlike marketing ad spend, where attribution models are widely accepted, measuring AI ROI in sales is complicated by dozens of variables you can't control.

Without proper controls, you risk attributing improvements to AI when they’re really just natural variance.

CROs are trained to question anything that sounds like a “black box.” Anecdotes like “our reps love the AI” won’t convince them. They expect statistical rigor, just like in any financial investment decision. That’s why proving ROI requires clear attribution, causality, and confidence levels.

In digital marketing, A/B testing is straightforward: you serve two ads, track conversions, and compare results. In sales, however, cycles are long, deals are few, and human behavior is messy. Traditional experiments can take months and are hard to prioritize.

Still, there are reliable methods for AI A/B testing revenue impact if you adapt designs to sales workflows.

The gold standard: assign some reps AI tools, and keep a control group without access. Compare their KPIs over time.

Example: A B2B SaaS company gave half its mid-market reps AI-assisted qualification. After 6 months, the AI group showed a 12% higher stage-to-opportunity conversion.
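To make the mechanics concrete, here is a minimal Python sketch of random assignment and a relative-lift calculation. It assumes you can export rep IDs and per-group conversion counts from your CRM. The function names and all numbers are hypothetical, chosen only for illustration, and are not the data behind the example above.

```python
import random

def assign_groups(rep_ids, seed=42):
    """Randomly split reps into an AI (treatment) group and a control group."""
    shuffled = sorted(rep_ids)             # sort first so the split depends only on the seed
    random.Random(seed).shuffle(shuffled)  # seeded shuffle keeps the assignment auditable
    half = len(shuffled) // 2
    return {"ai": shuffled[:half], "control": shuffled[half:]}

def conversion_rate(converted, total):
    """Share of records that converted; 0.0 when there is no data."""
    return converted / total if total else 0.0

def relative_lift(treatment_rate, control_rate):
    """Relative improvement of the treatment group over the control group."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return (treatment_rate - control_rate) / control_rate

# Hypothetical rep roster and conversion counts, for illustration only
groups = assign_groups([f"rep_{i:02d}" for i in range(20)])
ai_rate = conversion_rate(converted=56, total=200)
control_rate = conversion_rate(converted=50, total=200)
print(f"AI group: {len(groups['ai'])} reps, control: {len(groups['control'])} reps")
print(f"Relative lift: {relative_lift(ai_rate, control_rate):.0%}")
```

Fixing the seed means anyone reviewing the experiment can reproduce exactly who landed in which group, which helps when leadership asks whether the best reps were hand-picked for the AI cohort.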

If withholding AI feels politically risky, roll it out in waves by territory or segment. Each wave acts as a temporary control for the next.
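A staggered rollout can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the territory names are made up, waves are filled round-robin, and in practice you would likely randomize the territory order before building waves.

```python
def build_waves(territories, n_waves):
    """Distribute territories round-robin across rollout waves (wave 0 goes first)."""
    waves = [[] for _ in range(n_waves)]
    for i, territory in enumerate(territories):
        waves[i % n_waves].append(territory)
    return waves

def split_at_period(waves, period):
    """At a given period, waves 0..period have AI; later waves act as controls."""
    treated = [t for wave in waves[: period + 1] for t in wave]
    control = [t for wave in waves[period + 1 :] for t in wave]
    return treated, control

# Hypothetical territories, for illustration only
waves = build_waves(["NA-East", "NA-West", "EMEA", "APAC"], n_waves=2)
treated, control = split_at_period(waves, period=0)
print("Has AI now:", treated)
print("Temporary control:", control)
```

Once the final wave goes live, the control list is empty, so the comparison window is limited to the periods before full rollout.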

When you don’t have enough reps to run proper tests, statistical models can simulate a “control” group based on historical performance.
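A full synthetic control method weights several comparison units, which is beyond a short example. As a simpler stand-in, the sketch below projects a linear trend from pre-rollout history and treats that projection as the "what would have happened" baseline. The quarterly win rates are hypothetical, and a straight-line trend is itself an assumption that ignores seasonality and market shifts.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two historical periods")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

def estimated_lift(pre_period, post_period):
    """Average gap between observed post-rollout values and the projected baseline."""
    slope, intercept = fit_linear_trend(pre_period)
    start = len(pre_period)
    projected = [intercept + slope * (start + i) for i in range(len(post_period))]
    gaps = [actual - expected for actual, expected in zip(post_period, projected)]
    return sum(gaps) / len(gaps)

# Hypothetical quarterly win rates before and after an AI rollout
pre = [0.20, 0.21, 0.22, 0.23]
post = [0.27, 0.28]
print(f"Estimated lift vs. projected baseline: {estimated_lift(pre, post):.3f}")
```

Because the baseline already includes the upward trend from before the rollout, only the improvement beyond that trend is credited to AI.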

When presenting results, confidence intervals matter as much as averages. “AI improved win rate by 7% ± 2%” sounds credible. “AI improved win rate by 7%” with no interval attached raises red flags for CROs.

Think of confidence intervals as the boardroom insurance policy. They show you’ve done the math carefully.
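For readers who want to see the math, here is a minimal Python sketch of a confidence interval for the difference between two win rates. It uses the standard normal approximation for two proportions, which is only one of several reasonable methods and gets shaky with small deal counts. The deal counts are hypothetical.

```python
import math

def win_rate_diff_ci(wins_ai, deals_ai, wins_ctrl, deals_ctrl, z=1.96):
    """Difference in win rates (AI minus control) with a normal-approximation CI.

    z=1.96 corresponds to roughly 95% confidence.
    Returns (difference, lower bound, upper bound) as fractions, not percentages.
    """
    p_ai = wins_ai / deals_ai
    p_ctrl = wins_ctrl / deals_ctrl
    diff = p_ai - p_ctrl
    # Standard error of the difference between two independent proportions
    se = math.sqrt(p_ai * (1 - p_ai) / deals_ai + p_ctrl * (1 - p_ctrl) / deals_ctrl)
    return diff, diff - z * se, diff + z * se

# Hypothetical counts: 500 closed deals per group
diff, low, high = win_rate_diff_ci(wins_ai=135, deals_ai=500, wins_ctrl=100, deals_ctrl=500)
print(f"Win rate lift: {diff:.1%} (95% CI {low:.1%} to {high:.1%})")
```

With these made-up numbers, a 7-point lift on 500 deals per group carries a margin of roughly ±5 percentage points. Tight intervals take a lot of deals, so a long test window is usually the price of a credible number.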

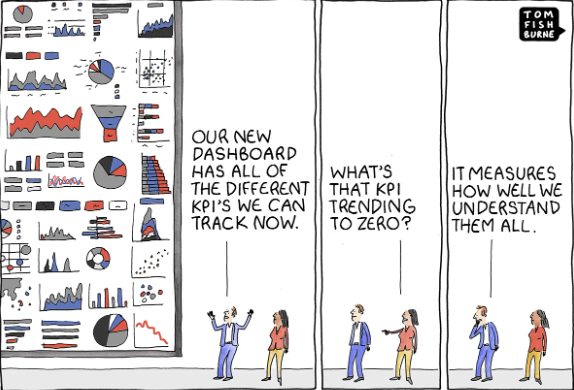

One of the biggest mistakes teams make is reporting AI performance in technical terms only. Phrases like “model accuracy improved by 5%” and “latency dropped by 100ms” don’t mean much to a CRO or CFO. Instead, you must link model-level improvements to business outcomes.

If an AI lead-scoring model improves accuracy, show how that translates into more qualified pipeline, which boosts stage conversion, and fewer wasted meetings, which lowers the cost of sales.

If AI enables reps to multithread faster or surface buying signals sooner, quantify how that shortens the average sales cycle.

Example: Reducing email drafting latency led to faster customer responses, cutting the cycle by 8 days.

A simple framework CROs understand:

(Δ Win Rate × Avg Deal Size × Number of Deals) – AI Cost = ROI

This makes the translation from “better predictions” to “real revenue impact” explicit.
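Here is the formula as a small Python sketch with hypothetical inputs. Two assumptions to flag: “Number of Deals” is read as the number of opportunities worked in the period, and win rate is expressed as a fraction. Note that the formula as written yields a net dollar return. Dividing that by AI cost gives the ratio many finance teams mean by ROI, so the sketch shows both.

```python
def ai_net_return(delta_win_rate, avg_deal_size, num_deals, ai_cost):
    """(delta win rate x avg deal size x number of deals) - AI cost, in currency units."""
    incremental_revenue = delta_win_rate * avg_deal_size * num_deals
    return incremental_revenue - ai_cost

def roi_ratio(net_return, ai_cost):
    """Net return per unit of spend; 1.0 means the tool returned 100% on top of its cost."""
    if ai_cost <= 0:
        raise ValueError("ai_cost must be positive")
    return net_return / ai_cost

# Hypothetical inputs: 5-point win rate lift, $40k average deal, 400 opportunities, $250k AI spend
net = ai_net_return(delta_win_rate=0.05, avg_deal_size=40_000, num_deals=400, ai_cost=250_000)
print(f"Net return: ${net:,.0f}")
print(f"ROI ratio: {roi_ratio(net, 250_000):.1f}x")
```

Feeding in the lower and upper bounds of a win rate confidence interval instead of a single point estimate gives leadership a range of outcomes instead of one fragile number.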

CROs and boards want clean, standardized reporting—not a data science thesis. Here’s what works.

If you only have anecdotal feedback or activity metrics, label them as leading indicators—not ROI proof.

This is where Pod shines. Unlike generic AI dashboards that stop at activity tracking, Pod directly ties deal-level shifts (e.g., better qualification, stronger multithreading, faster follow-ups) to pipeline movement.

Instead of reporting “AI wrote 3,000 emails,” Pod shows:

That’s the difference between activity metrics and a credible ROI story leadership can take to the board.

Proving AI ROI in sales isn’t about flashy dashboards or vanity metrics; it’s about showing causal, confident links between AI adoption and revenue outcomes. By using solid experimentation design, translating model gains into GTM metrics, and presenting results in board-ready templates, you can build the credibility needed to scale AI across your sales organization.

Pod makes this process tangible by connecting AI-driven deal-level improvements directly to pipeline movement, giving CROs and boards the ROI evidence they demand. Book a demo and learn more about Pod today.